Origins of Machine Learning

Machine learning and artificial intelligence were first used to play games! In 1952, Arthur Samuel, an IBM scientist who specialized in vacuum tubes and transistor design, wrote a computer program to play checkers against a human opponent. This was the first time a general purpose digital computer had been used for a non-arithmetic task, and represented the first AI program to run in the United States.

Samuel’s program used heuristics, but also “learnt” by adjusting weights on how much the program should rely on each of thirty measures of the strength of a particular checkers board. By refining these weights, the program slowly became a better player, ultimately beating the Connecticut state checker champion in 1961.

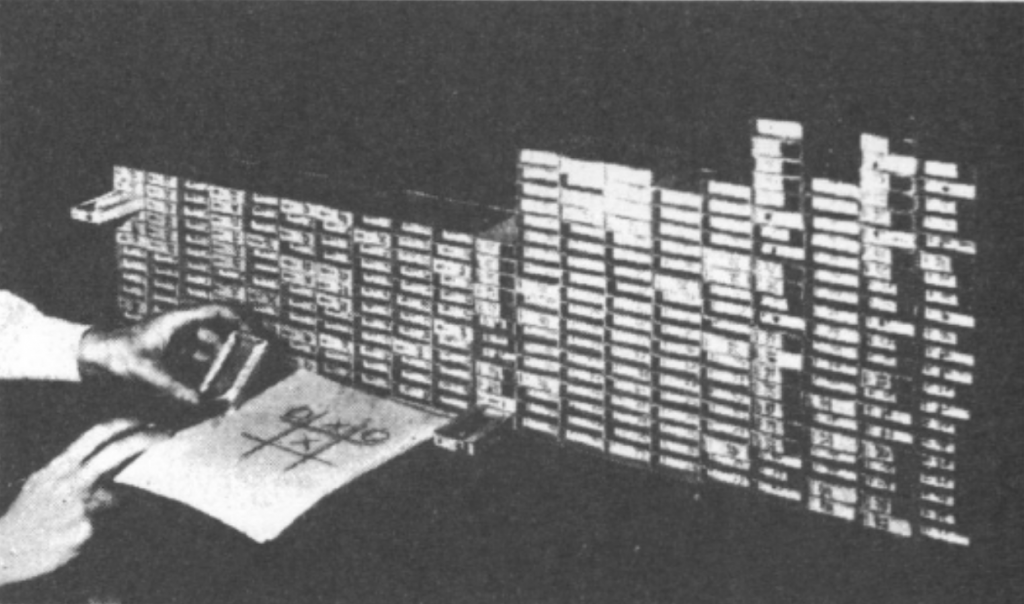

While Samuel had the power of IBM’s new digital computer, another founding father of machine learning was forced to use more modest tools. Donald Mitchie, a former collaborator of Alan Turning at Bletchley Park in WWII, was a professor of surgery at the University of Edinburgh. Without access to a digital computer, Mitchie built himself a machine which learned how to play Tic Tac Toe using 304 matchboxes. He called his machine Matchbox Educable Noughts and Crosses Engine, or MENACE.

MENACE

Here’s a picture of the original MENACE:

MENACE was first described in a paper by Mitchie, “Experiments on the mechanization of game-learning Part I. Characterization of the model and its parameters”, which appeared in the Computer Journal, in 1962.

MENACE’s 304 matchboxes corresponded to one of the logically equivalent board states we generated earlier in this website. In each matchbox was a set of colored beads; each color represented one of the possible legal moves that MENACE could play from this board state. In order to take the machine’s turn, the operator of the machine finds the matchbox for the current board, opens it and draws one bead out of the matchbox at random. This determines the move. The operator keeps the draw open and puts the chosen bead to one side.

The game continues like this until it hits a terminal state. If MENACE wins the game, the operator goes back to all the open matchboxes and adds three extra beads of the same color that was drawn from it. If MENACE draws the game, the operator goes back to all the open matchboxes and adds just one extra bead of the same color that was drawn from it. And if MENACE loses, the operator discards the colored beads. With one learning cycle complete, MENACE and the human opponent continue playing.

The idea is that on each training game, moves which ultimately led to a win are reinforced by increasing the probability that those moves will be taken for the given game state. Similarly, if MENACE draws, there’s some but not as much reinforcement. And if MENACE loses, those the probability of those moves is reduced. In this way, the machine gradually learns to play Tic Tac Toe!

Training MENACE

Mitchie’s original paper is not so clear on the exact training regime, but a recent review article by Rodney Brooks reproduced MENACE in software and trained MENACE with 4,000 games against three different player styles: (i) a completely random player (like the player in the introduction); (ii) a perfect player (i.e. MiniMax); (iii) a near-perfect player (i.e. Minimax + a random move 25% of the time). We saw the second and third playing styles in the MiniMax section.

After training, Brooks found the following results:

| Training \\ Opponent | Random Player | Perfect Player | Near-perfect Player |

| No Training | 59 / 13 / 28 | 0 / 24 / 76 | 27 / 19 / 53 |

| Random Player | 86 / 8 / 6 | 0 / 28 / 72 | 50 / 20 / 30 |

| Perfect Player | 71 / 15 / 14 | 0 / 100 / 0 | 38 / 48 / 14 |

| Near-perfect Player | 90 / 8 / 2 | 0 / 99 / 1 | 56 / 42 / 2 |

Each row corresponds to a training regime. Each column corresponds to an opponent. Each cell shows the percentage of wins / draws/ losses.

You can see Brooks confirmed the result we found earlier: in totally random play, the first player has a 59% chance of winning, a 13% chance of a draw and a 28% chance of a loss. Going across the first row, MENACE with no training will never win against a Perfect Player, and will win 27% of the time against the Near-perfect Player.

If trained against a Random Player, the machine learning sees enough wins that it does quite well against a Random Player and a Near-perfect Player, but does almost no better than a random player against the Perfect Player.

When trained against the Perfect Player, MENACE learns how to draw! But it doesn’t do that well against a Near-Perfect Player as the machine learning has simply never been exposed to a winning game.

MENACE does best when trained with the Near-perfect Player. In this training regime, the algorithm is exposed to wins, losses and draws.

The bottom line: MENACE’s performance is very dependent on the training set!

Lessons Learnt

Running a reinforcement learning algorithm to play Tic Tac Toe demonstrates a number of important issues that arise in most machine learning use-cases. First, the learning algorithm is very prescribed: there are 304 matchboxes, and a total of 1087 different matchbox/color combinations (=number of logically equivalent board states * number of legal moves available to each). All the algorithm can do is adjust those 1087 parameters that were set up by the developer. The algorithm can’t “think outside the box” (pun intended).

Next, the training sets were large. In the results above, Brooks ran 4,000 games per training set. For Tic Tac Toe that’s not so bad. But for other examples, there may not be enough training data to be useful. And related to that, we saw that the training set was very important, and defines how well the algorithm will perform in different situations. Another issue with this learning algorithm is that the way we implement the reinforcement is also important. Mitchie chose to credit a win with three extra beads, a draw with one extra bead, and a loss with the forfeit of the bead. What if those rules were different? That would be interesting to research, but my guess is that would also change the algorithm’s performance.